This session covered the update step of the algorithm, as shown in the session structure.

The session started with some basic notions of Bayesian probability. We focused in particular on the link between probability and logic. This took us to the classical syllogism and to the weaker syllogisms that better represent the way humans do inference. The source for this part of the workshop was the book by Jaynes: “Probability Theory: The Logic of Science”. A recommended read!
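To make the weak syllogism concrete, here is a minimal sketch in Python. The numbers and the function name `posterior` are my own illustrative choices, not taken from the slides. The premise "if A then B" is encoded as P(B|A) = 1; observing B does not prove A, but Bayes' rule shows that it makes A more plausible.

```python
def posterior(prior_a, p_b_given_a, p_b_given_not_a):
    """Bayes' rule for a binary hypothesis A after observing B."""
    # Total probability of observing B
    p_b = p_b_given_a * prior_a + p_b_given_not_a * (1.0 - prior_a)
    return p_b_given_a * prior_a / p_b


prior_a = 0.2  # assumed prior plausibility of A
# "If A then B" means P(B|A) = 1; P(B|not A) = 0.5 is an assumed value
p_a_given_b = posterior(prior_a, p_b_given_a=1.0, p_b_given_not_a=0.5)

print(f"P(A) = {prior_a:.3f}, P(A|B) = {p_a_given_b:.3f}")
```

With these assumed numbers the plausibility of A goes from 0.2 to 1/3. Whenever P(B|not A) < 1, the posterior is strictly larger than the prior, which is exactly the weak syllogism "B is true, therefore A becomes more plausible".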

After this we looked at the prediction step, which we discussed in the previous session, from a probabilistic perspective. Next, we presented the update step as an application of Bayes' rule. Finally, after introducing some identities, we gave the explicit formulas for the Kalman filter.
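For reference, the two steps can be sketched in a few lines of NumPy. This is the standard textbook form of the linear Kalman filter, not necessarily the notation used in the slides. The names `F`, `Q`, `H`, `R` (transition matrix, process noise covariance, observation matrix, measurement noise covariance) and the split into the functions `predict`, `update` and `kalman_filter` are assumptions for illustration.

```python
import numpy as np


def predict(x, P, F, Q):
    """Prediction step for the model x_k = F x_{k-1} + w, with w ~ N(0, Q)."""
    return F @ x, F @ P @ F.T + Q


def update(x_pred, P_pred, z, H, R):
    """Update step: condition on the measurement z = H x + v, with v ~ N(0, R)."""
    innovation = z - H @ x_pred            # measurement minus its prediction
    S = H @ P_pred @ H.T + R               # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)    # Kalman gain
    x = x_pred + K @ innovation
    P = (np.eye(P_pred.shape[0]) - K @ H) @ P_pred
    return x, P


def kalman_filter(zs, x0, P0, F, Q, H, R):
    """Alternate prediction and update over a sequence of measurements."""
    x, P = x0, P0
    means = []
    for z in zs:
        x, P = predict(x, P, F, Q)
        x, P = update(x, P, z, H, R)
        means.append(x)
    return np.array(means)
```

A quick sanity check is the scalar case with `F = H = 1`, `Q = 0`, `R = 1`, prior mean 0 and prior variance 1: after one measurement `z = 2` the posterior mean sits halfway between prior and measurement, and the variance is halved.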

I am not totally happy with how I presented the transition from probabilities to the prediction and update steps. I think the jump was too abrupt. It needs a couple of intermediate slides to soften the transition, maybe a simple example with 2 or 3 points.

Also, I underestimated the time the participants require to implement the three basic functions of the Kalman filter. I had estimated 30 minutes, but it took almost 1 hour, and not everybody succeeded.

The next iteration of the workshop will address these issues!

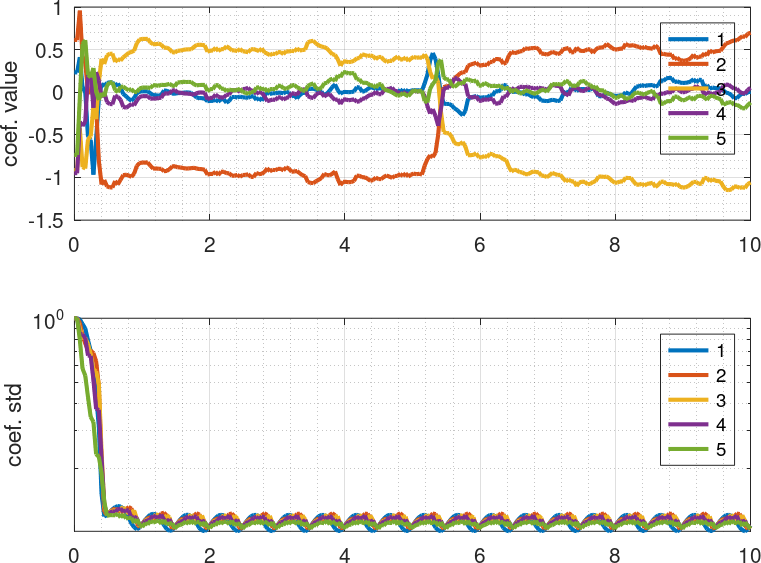

We then moved to filtering data using data-driven models. I think this was very interesting for many. The highlight was doing some basic frequency tracking with a discrete Fourier basis. We had data generated by linear combinations of sine functions. At some unknown point in time, the coefficients of the linear combination change abruptly (frequencies 2 and 3 exchange coefficients). A simple filter with drifting coefficients manages to detect and track the changes.
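The experiment can be reproduced roughly as follows. This is a minimal sketch under my own assumptions, not the workshop code: a sine-only basis at 1, 2 and 3 Hz, coefficients modelled as a random walk (the "drift"), and hand-picked noise levels `q` and `r`. The function name `track_coefficients` and all numerical values are hypothetical.

```python
import numpy as np


def track_coefficients(y, basis, q=1e-5, r=1e-2):
    """Kalman filter whose state is the vector of basis coefficients.

    The coefficients follow a random walk (F = I, Q = q I) and each scalar
    sample is observed as y[k] = basis[k] @ coefficients + noise (variance r).
    """
    n, m = basis.shape
    x = np.zeros(m)              # initial coefficient estimate
    P = np.eye(m)                # initial covariance
    Q = q * np.eye(m)
    estimates = np.empty((n, m))
    for k in range(n):
        P = P + Q                            # prediction step (mean unchanged)
        h = basis[k]                         # observation row at time k
        s = h @ P @ h + r                    # scalar innovation variance
        K = P @ h / s                        # Kalman gain
        x = x + K * (y[k] - h @ x)           # update step
        P = P - np.outer(K, h @ P)
        estimates[k] = x
    return estimates


rng = np.random.default_rng(0)
n_samples, change = 2000, 1000               # change point, unknown to the filter
t = np.arange(n_samples) * 0.01
freqs = np.array([1.0, 2.0, 3.0])            # assumed frequencies in Hz
basis = np.sin(2.0 * np.pi * np.outer(t, freqs))

before = np.array([1.0, 2.0, 0.5])
after = np.array([1.0, 0.5, 2.0])            # frequencies 2 and 3 swap coefficients
coeffs = np.where((np.arange(n_samples) < change)[:, None], before, after)
y = np.sum(basis * coeffs, axis=1) + 0.1 * rng.standard_normal(n_samples)

estimates = track_coefficients(y, basis)
print("before change:", np.round(estimates[change - 1], 2))
print("end of data:  ", np.round(estimates[-1], 2))
```

The ratio `q / r` controls how quickly the filter forgets old data: a larger `q` reacts faster to the swap but gives noisier coefficient estimates.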

It is a pity that I did not have the planned time for this part. I will repeat a fraction of this in the recap of the next session.

Slides

To download a PDF file with the slides and notes click the image below!